Prior to my beta review, I had been struggling to understand how the agent behavior tree would direct changes in the agent's path. Feedback from my beta review helped to clarify this. The behavior tree will simply change the edge weights in the graph representation of the world. My agent will recalculate its path every frame to react to these weight changes.

The first step in implementing this design is to have my agent recalculate its path every frame. At first, I ran into some memory issues.. eek.

I resolved this by revising my pathfinder code. Instead of returning the entire path, the pathfinder function now only returns the next node in the path. This is sufficient since the path will be recalculated every frame (so returning the entire path is excessive). Out of memory error fixed.

I still had to tackle my bug from my previous post, where the agent would stop before arriving at the target. I identified the bug in my graph representation, where I was handling obstacle corners incorrectly. I was creating a diagonal edge around a corner when I shouldn't have. I fixed this bug, and huzzah! See the video to watch my agent arrive at its target location. (Again, the non-smooth movement is an issue with the recording's frame rate.)

Tuesday, April 19, 2011

Sunday, April 17, 2011

Path Following!

Can finally see the agent following the path calculated by A*! The frame rate of the recording isn't great, but imagine the agent moving smoothly :)

One more bug: The agent is stopping before it arrives at the target, which is indicated by the small pink square.

One more bug: The agent is stopping before it arrives at the target, which is indicated by the small pink square.

Thursday, April 14, 2011

Unity Woes

I have been having trouble understanding how access of variables between scripts works in Unity. I thought I knew how, but after much trial and error, I am still getting errors. My pathfinding code is contained in one script, and my agent behavior code is contained in another. Because of this obstacle, I have been unable to have my agent respond to the path calculated by my pathfinding code.

For example, in the screenshot below, the console shows a path count and the index. I want the index to initialize to 1 less than the path count, which it currently is not doing.

I have already read a lot of Unity manuals and tutorials, but I suppose I need to read some more...

Saturday, April 9, 2011

Some Videos

The first video shows my project implementing the Roomba behavior tree.

The second video shows in the Unity console that my project correctly creates a graph representation of the world and calculates a path from a starting to end node. The position of these nodes and the edge costs are specified in the bottom right corner.

Thursday, April 7, 2011

A* and Unity

This week I had my beta review with Joe and Professor Badler. The review was extremely helpful in confirming my approach thus far and in generating ideas for how to get past some of the road blocks I am facing. Another point of feedback was to post to my blog more frequently -- even if I do not have a result to show, it's important to share and discuss the problems I am trying to work through.

At my beta review, I showed my progress since the alpha review:

- A* pathfinding implemented in C++. I am more familiar with C++ than C#, so I wanted to focus on implementing the logic of the algorithm rather than struggling with syntax.

- Graph creation in Unity. I needed a graph representation of the nodes and their neighbors in Unity before I could implement pathfinding. Each node has 8 neighbors (since my map is a square grid). I spent a lot of time here reading more about Unity and C#.

- A* pathfinding in Unity. I can specify start and end nodes and the code will generate the correct path. I am still trying to understand how Unity's physics engine syntax works, so I am still working to have my agent respond correctly to the paths generated. This will be my major task for the week.

I plan to have my behavior tree dynamically recalculate weights on the graph to influence the paths generated by the A* algorithm. Because I will be making constant calls to A*, I want to go over my code again and try to make it as efficient as possible.

At my beta review, I showed my progress since the alpha review:

- A* pathfinding implemented in C++. I am more familiar with C++ than C#, so I wanted to focus on implementing the logic of the algorithm rather than struggling with syntax.

- Graph creation in Unity. I needed a graph representation of the nodes and their neighbors in Unity before I could implement pathfinding. Each node has 8 neighbors (since my map is a square grid). I spent a lot of time here reading more about Unity and C#.

- A* pathfinding in Unity. I can specify start and end nodes and the code will generate the correct path. I am still trying to understand how Unity's physics engine syntax works, so I am still working to have my agent respond correctly to the paths generated. This will be my major task for the week.

I plan to have my behavior tree dynamically recalculate weights on the graph to influence the paths generated by the A* algorithm. Because I will be making constant calls to A*, I want to go over my code again and try to make it as efficient as possible.

Friday, April 1, 2011

Integration

This week I've been working to integrate my A* code with Unity in preparation for beta reviews. Still have some polishing to do, videos to come soon!

Thursday, March 24, 2011

Pathfinding

This week I finished up the A* algorithm. Now that I have basic pathfinding implemented, I'd like to integrate it with Unity so that I can give an agent a target destination and have it move to the target using the calculated path. Right now, the implementation assumes that obstacles are not moving, which of course is an unrealistic assumption and an area that I will need to revisit later. Another next step will be to code a basic AI for "wandering" enemy movement.

As far as self evaluation goes, I feel that these past few weeks have been more difficult in terms of balancing senior design with my other classes. I felt that my alpha review went well and that my work earlier in the semester helped me build a strong foundation for my project. Now I just need to set aside more time to actually coding and implementing my framework. It seems sometimes that the deeper I get into the project, the more tasks I discover and need to add to my to do list (such as basic AI for enemies).

Current task list:

- Integrate pathfinding with Unity

- Enemy AI for wandering movement

- Visibility algorithm

- Updating A* for moving enemies

- Implement behaviors

Needless to say, I have a lot of hard work ahead of me! The self evaluation was helpful in recognizing this--thanks Joe!

As far as self evaluation goes, I feel that these past few weeks have been more difficult in terms of balancing senior design with my other classes. I felt that my alpha review went well and that my work earlier in the semester helped me build a strong foundation for my project. Now I just need to set aside more time to actually coding and implementing my framework. It seems sometimes that the deeper I get into the project, the more tasks I discover and need to add to my to do list (such as basic AI for enemies).

Current task list:

- Integrate pathfinding with Unity

- Enemy AI for wandering movement

- Visibility algorithm

- Updating A* for moving enemies

- Implement behaviors

Needless to say, I have a lot of hard work ahead of me! The self evaluation was helpful in recognizing this--thanks Joe!

Thursday, March 17, 2011

Back to Work

Spring break was a nice break from work. Now back to it!

I've been thinking more about my visibility detection problem this week. I think I will start with a simple detection method for now and add to it once I've got a basic version working. A basic implementation would be to keep checking if an agent is in an enemy's line of sight as agents and enemies move over time.

The next conceptual problem I wanted to tackle was the Hide behavior. The Hide behavior is more difficult than Walk and Run because Hide needs to move the agent into a space on the map that will block its visibility from approaching enemies. A simple way of achieving this objective would be to identify hiding spaces on the map and have the agent calculate a path to that target hiding spot.

I've also been working on implementing A* this week. The main reference I have been using has been this article. My goal is to have the algorithm implemented and tested by the end of the weekend, so look for more updates then!

Also, before spring break, I had updated my design document, which you can find here.

I've been thinking more about my visibility detection problem this week. I think I will start with a simple detection method for now and add to it once I've got a basic version working. A basic implementation would be to keep checking if an agent is in an enemy's line of sight as agents and enemies move over time.

The next conceptual problem I wanted to tackle was the Hide behavior. The Hide behavior is more difficult than Walk and Run because Hide needs to move the agent into a space on the map that will block its visibility from approaching enemies. A simple way of achieving this objective would be to identify hiding spaces on the map and have the agent calculate a path to that target hiding spot.

I've also been working on implementing A* this week. The main reference I have been using has been this article. My goal is to have the algorithm implemented and tested by the end of the weekend, so look for more updates then!

Also, before spring break, I had updated my design document, which you can find here.

Thursday, March 3, 2011

Alpha Review Feedback

Check out my alpha review video from last Friday here.

This week, I got comments back from my alpha review. Overall, the feedback was positive. There were a number of comments and input on how to model visibility as well as the behavior trees for the basic actions (Walk, Run, Hide, Flee).

I had 3 midterms, 1 paper, and a quiz this week, so unfortunately I don't have much to report on the senior design front.

Next steps

This week, I got comments back from my alpha review. Overall, the feedback was positive. There were a number of comments and input on how to model visibility as well as the behavior trees for the basic actions (Walk, Run, Hide, Flee).

I had 3 midterms, 1 paper, and a quiz this week, so unfortunately I don't have much to report on the senior design front.

Next steps

- Implement A*

- Figure out how to model visibility

- Flesh out more detailed trees for basic actions

Friday, February 25, 2011

Improved Behavior Tree + Unity

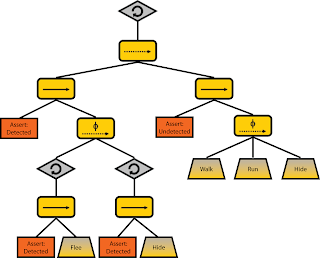

This week I worked to use the feedback I had received to improve my behavior tree.

Here is the logic behind the new and improved tree:

Here is the logic behind the new and improved tree:

- Assumption: The enemy is all-knowing (knows where enemies are, where target is, etc)

- 4 basic actions: Walk, Run, Flee, Hide

- Note that these are subtrees (since they are behavior trees themselves

- 2 agent states: Detected, Undetected

- When Detected, Flee or Run until Undetected

- When Undetected, continue to make progress toward goal, unless Detected

- In any given state, the way an agent decides which action to take depends on its risk attitude

- Each agent will have parameter r_loving and r_averse, which sum to 1.0

- These parameters will be passed into stochastic selectors to determine which action to take next

- (I also plan to incorporate enemy distance into the calculation when computing probabilities for stochastic selectors, but I haven't come up with a precise equation yet)

I also played around some in Unity. I am now able to dynamically create an arbitrary map from a .txt config file in Unity. The camera view is orthographic, since I am working in 2D. I can also use the arrow keys to move my agent around for testing.

Things to do next:

- I'd like to add a visual marker on the agent to indicate what direction he's facing

- Have the camera following the target

- A* pathfinding

Thursday, February 17, 2011

Learning Unity

This week, I did some more reading on behavior trees and have new ideas for how to structure my behavior tree.

To better understand behavior trees and why they're effective, it was helpful to first do some background reading on hierarchical finite state machines and hierarchical task network planners, two other commonly used methods for AI development. HFSMs is simply a hierarchy of FSMs, which allows us to take advantage of states that share common transitions. The downside is that, while transitions can be reused, the states are not modular and so are not easily reusable.

HTNs on the other hand take in an initial state, desired goal, and set of possible tasks to produce a sequence of actions that lead from the initial state to the goal. Constraints are represented in task networks, which can get very complex very quickly.

Behavior trees simplify these representations by distilling them into distinct behaviors. Using selectors, sequences, and decorators, you can pretty much build any complex behavior from these simple operations.

The behavior tree approach is very different from the initial research and literature review I conducted on stealthy agents. The methods used in my initial research, such as corridor maps and knowledge-based probability maps, rely on heavy preprocessing of environmental variables to calculate an optimal path. This does not allow the agent to react as easily to a changing environment. The ability for an agent to react to changes in its environment is a definite advantage of using behavior trees.

To improve my behavior tree, I plan to categorize behaviors into undetected and detected. Undetected behaviors will be further broken down into risk-loving, risk-neutral, and risk-averse behaviors. These attitudes toward risk will guide the agent's decision-making (such as whether he should he run across an open space and risk being detected by an enemy unit). Detected behaviors will include strategies that the agent should employ once it has been detected by enemy, such as running away quickly, running and then hiding in a hiding place, etc. Once the agent has evaded detection, he should resume undetected behaviors to get to the target location. I'll need to break this down further, but that's the big picture strategy for now.

I also started playing around in Unity and attended the Unity tutorial on Sunday. I'm working to get basic movement of the agent. I have him moving, but have had some trouble getting the collision detection to work.

To better understand behavior trees and why they're effective, it was helpful to first do some background reading on hierarchical finite state machines and hierarchical task network planners, two other commonly used methods for AI development. HFSMs is simply a hierarchy of FSMs, which allows us to take advantage of states that share common transitions. The downside is that, while transitions can be reused, the states are not modular and so are not easily reusable.

HTNs on the other hand take in an initial state, desired goal, and set of possible tasks to produce a sequence of actions that lead from the initial state to the goal. Constraints are represented in task networks, which can get very complex very quickly.

Behavior trees simplify these representations by distilling them into distinct behaviors. Using selectors, sequences, and decorators, you can pretty much build any complex behavior from these simple operations.

The behavior tree approach is very different from the initial research and literature review I conducted on stealthy agents. The methods used in my initial research, such as corridor maps and knowledge-based probability maps, rely on heavy preprocessing of environmental variables to calculate an optimal path. This does not allow the agent to react as easily to a changing environment. The ability for an agent to react to changes in its environment is a definite advantage of using behavior trees.

To improve my behavior tree, I plan to categorize behaviors into undetected and detected. Undetected behaviors will be further broken down into risk-loving, risk-neutral, and risk-averse behaviors. These attitudes toward risk will guide the agent's decision-making (such as whether he should he run across an open space and risk being detected by an enemy unit). Detected behaviors will include strategies that the agent should employ once it has been detected by enemy, such as running away quickly, running and then hiding in a hiding place, etc. Once the agent has evaded detection, he should resume undetected behaviors to get to the target location. I'll need to break this down further, but that's the big picture strategy for now.

I also started playing around in Unity and attended the Unity tutorial on Sunday. I'm working to get basic movement of the agent. I have him moving, but have had some trouble getting the collision detection to work.

Thursday, February 10, 2011

Behavior Tree

This week, I developed a first draft of a behavior tree to model agent behavior. Behaviors I considered included: walking, changing speed, hiding, fleeing. These behaviors react to changing conditions in the environment, specifically with respect to the location of enemies. It's still a rough model, so I welcome any feedback/ideas!

Thursday, February 3, 2011

Incorporating Feedback

While my initial research on the corridor map method (CMM) and knowledge-based probability maps was helpful in understanding the latest research developments in stealthy pathfinding, I realized this week that these approaches may not be the best starting point for my project. Feedback from Joe and Norm suggested that I look into behavior trees as a way of representing the behavior of my stealth agents.

After meeting with Joe this week, I got a better sense of how to break down my project. I will need to spend some time setting up my development framework and learning the tools (C# and Unity) I need for my project. This week, I will play around with C# and Unity, try to create a 2D map in Unity, and draft a behavior tree for feedback.

After meeting with Joe this week, I got a better sense of how to break down my project. I will need to spend some time setting up my development framework and learning the tools (C# and Unity) I need for my project. This week, I will play around with C# and Unity, try to create a 2D map in Unity, and draft a behavior tree for feedback.

Thursday, January 27, 2011

More research

Paper papers papers! This week, I've continued my research on stealthy pathfinding methods. I found a set of slides from the author of one of my sources, "Stealth-Based Path Planning using Corridor Maps". The slides give a great overview and evaluation of various pathfinding methods over the years, demonstrating the evolution, advantages, and disadvantages of the methods. It also provides additional implementation details on the corridor map method described in the paper.

This next week, I plan to apply the research I've done (and any additional research) to improve and add to the framework for my project. I feel like as of now, my research has shown me all the different ways I can approach the pathfinding problem -- now I need to distill the research and figure out what's best for my project.

This next week, I plan to apply the research I've done (and any additional research) to improve and add to the framework for my project. I feel like as of now, my research has shown me all the different ways I can approach the pathfinding problem -- now I need to distill the research and figure out what's best for my project.

Project Documents

A quick summary of my senior design project:

In many games today, NPCs must move in the world without being detected by the player or other NPCs. These games required NPCs to be intelligent in evasion and avoidance. The purpose of this project is to develop a general AI for intelligent sneaking and hiding behaviors for agents in games. The agents will navigate around moving obstacles in a 2D plane to arrive at a target location undetected. This algorithm can be plugged into any game requiring stealthy NPC behavior.

The algorithm will be demonstrated in a game overlay where an agent must complete a “capture the flag” task that requires it to navigate through an environment with patrolling guards and obstacles.My design document can be found here.

Subscribe to:

Posts (Atom)