This week, I did some more reading on behavior trees and have new ideas for how to structure my behavior tree.

To better understand behavior trees and why they're effective, it was helpful to first do some background reading on hierarchical finite state machines (HFSMs) and hierarchical task network (HTN) planners, two other commonly used methods for AI development. An HFSM is simply a hierarchy of FSMs, which lets states that share common transitions be grouped so that those transitions are defined only once. The downside is that, while transitions can be reused, the states are not modular and so are not easily reusable.

HTNs, on the other hand, take in an initial state, a desired goal, and a set of possible tasks, and produce a sequence of actions that leads from the initial state to the goal. Constraints are represented in task networks, which can get very complex very quickly.

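Behavior trees simplify these representations by distilling them into distinct behaviors. A selector tries its children in order until one succeeds, a sequence runs its children in order until one fails, and a decorator wraps a single child and modifies its result. Using selectors, sequences, and decorators, you can build pretty much any complex behavior from these simple operations.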
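As a rough illustration of how selectors, sequences, and decorators compose, here is a minimal sketch in Python. It is not the Unity implementation; the node classes, the blackboard dictionary `bb`, and the leaf behaviors (`enemy_visible`, `move_to_target`, `hide`) are all made-up names for the sake of the example.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2
    RUNNING = 3


class Action:
    """Leaf node: wraps a function that reads/writes the blackboard."""
    def __init__(self, fn):
        self.fn = fn

    def tick(self, bb):
        return self.fn(bb)


class Sequence:
    """Runs children in order; stops at the first one that does not succeed."""
    def __init__(self, *children):
        self.children = children

    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status != Status.SUCCESS:
                return status
        return Status.SUCCESS


class Selector:
    """Tries children in order; stops at the first one that does not fail."""
    def __init__(self, *children):
        self.children = children

    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status != Status.FAILURE:
                return status
        return Status.FAILURE


class Inverter:
    """Decorator: swaps SUCCESS and FAILURE, passes RUNNING through."""
    def __init__(self, child):
        self.child = child

    def tick(self, bb):
        status = self.child.tick(bb)
        if status == Status.SUCCESS:
            return Status.FAILURE
        if status == Status.FAILURE:
            return Status.SUCCESS
        return status


# Hypothetical leaf behaviors for a stealthy agent.
def enemy_visible(bb):
    return Status.SUCCESS if bb["enemy_visible"] else Status.FAILURE


def move_to_target(bb):
    bb["action"] = "move_to_target"
    return Status.SUCCESS


def hide(bb):
    bb["action"] = "hide"
    return Status.SUCCESS


# "If no enemy is visible, move to the target; otherwise hide."
tree = Selector(
    Sequence(Inverter(Action(enemy_visible)), Action(move_to_target)),
    Action(hide),
)
```

Ticking the tree with a blackboard such as `{"enemy_visible": True}` falls through to `hide`, while `{"enemy_visible": False}` picks `move_to_target`. Because the tree is re-evaluated on every tick, the chosen behavior changes as soon as the blackboard does.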

The behavior tree approach is very different from the initial research and literature review I conducted on stealthy agents. The methods used in my initial research, such as corridor maps and knowledge-based probability maps, rely on heavy preprocessing of environmental variables to calculate an optimal path. Because the path is computed up front, the agent cannot react as easily to a changing environment. The ability of an agent to react to changes in its environment is a definite advantage of using behavior trees.

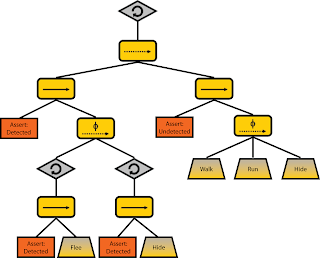

To improve my behavior tree, I plan to categorize behaviors into undetected and detected. Undetected behaviors will be further broken down into risk-loving, risk-neutral, and risk-averse behaviors. These attitudes toward risk will guide the agent's decision-making (such as whether it should run across an open space and risk being detected by an enemy unit). Detected behaviors will include strategies that the agent should employ once it has been detected by an enemy, such as running away quickly or running and then hiding in a hiding place. Once the agent has evaded detection, it should resume undetected behaviors to get to the target location. I'll need to break this down further, but that's the big-picture strategy for now.
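One way this split could look in code is sketched below. This is only a sketch under stated assumptions: the numeric thresholds, the `detection_chance` estimate, and the `take_covered_route` fallback are hypothetical placeholders, not part of the plan above.

```python
from enum import Enum


class Risk(Enum):
    AVERSE = "risk-averse"
    NEUTRAL = "risk-neutral"
    LOVING = "risk-loving"


# Hypothetical thresholds: the highest estimated chance of detection
# that each attitude is willing to accept when crossing open space.
MAX_ACCEPTED_RISK = {
    Risk.AVERSE: 0.1,
    Risk.NEUTRAL: 0.4,
    Risk.LOVING: 0.8,
}


def choose_behavior(detected, attitude, detection_chance, hiding_place_nearby=False):
    """Pick a behavior name from the detected / undetected categories."""
    if detected:
        # Detected behaviors: escape first, hide if a hiding place is available.
        return "run_and_hide" if hiding_place_nearby else "run_away"
    # Undetected behaviors: the risk attitude decides whether to take the chance.
    if detection_chance <= MAX_ACCEPTED_RISK[attitude]:
        return "cross_open_space"
    return "take_covered_route"  # hypothetical safer alternative
```

With an estimated detection chance of 0.5, a risk-loving agent would cross the open space while a risk-averse one would take the covered route. Once `detected` goes back to false, the same function returns to choosing among the undetected behaviors.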

I also started playing around in Unity and attended the Unity tutorial on Sunday. I'm working on basic movement for the agent. I have it moving, but have had some trouble getting the collision detection to work.