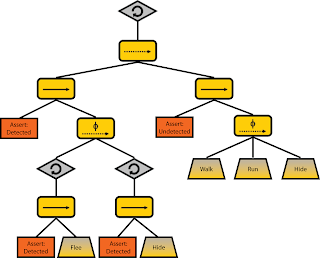

Here is the logic behind the new and improved tree:

- Assumption: The enemy is all-knowing (knows where enemies are, where the target is, etc.)

- 4 basic actions: Walk, Run, Flee, Hide

- Note that these are subtrees (since they are behavior trees themselves)

- 2 agent states: Detected, Undetected

- When Detected, Flee or Run until Undetected

- When Undetected, continue to make progress toward goal, unless Detected

- In any given state, the way an agent decides which action to take depends on its risk attitude

- Each agent will have parameters r_loving and r_averse, which sum to 1.0

- These parameters will be passed into stochastic selectors to determine which action to take next

- (I also plan to incorporate enemy distance into the calculation when computing probabilities for stochastic selectors, but I haven't come up with a precise equation yet)
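To make the selector idea concrete, here is a rough sketch of how the two states and the risk parameters could feed a stochastic selector. It is written in Python only to keep it short; it is not the Unity code. The pairing of actions with risk attitudes (Run and Walk as risk-loving, Flee and Hide as risk-averse) and the names `ACTIONS` and `stochastic_select` are assumptions made for illustration, and enemy distance is left out since that equation is not settled yet.

```python
import random

# Assumed pairing of actions with risk attitudes (not spelled out in the post):
# the risk-loving choice keeps pushing toward the goal, the risk-averse one plays safe.
ACTIONS = {
    "Detected":   {"loving": "Run",  "averse": "Flee"},
    "Undetected": {"loving": "Walk", "averse": "Hide"},
}


def stochastic_select(state, r_loving, r_averse, rng=random):
    """Pick the next action subtree for a state, weighted by risk attitude."""
    if abs(r_loving + r_averse - 1.0) > 1e-9:
        raise ValueError("r_loving and r_averse must sum to 1.0")
    options = ACTIONS[state]
    # random.choices draws one item with probability proportional to its weight.
    return rng.choices(
        [options["loving"], options["averse"]],
        weights=[r_loving, r_averse],
    )[0]


if __name__ == "__main__":
    # A fairly cautious agent: hides or flees about 70% of the time.
    for state in ("Undetected", "Detected"):
        print(state, "->", stochastic_select(state, r_loving=0.3, r_averse=0.7))
```

An agent with r_loving = 1.0 would always Run when Detected and always Walk when Undetected under this pairing, while r_averse = 1.0 would always Flee or Hide.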

I also played around some in Unity. I am now able to dynamically create an arbitrary map from a .txt config file in Unity. The camera view is orthographic, since I am working in 2D. I can also use the arrow keys to move my agent around for testing.
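For anyone curious what loading a map from a text file might look like, here is a minimal sketch of the parsing step. The file format is an assumption for illustration (`#` for a wall, `.` for open floor, `A` for the agent start, `T` for the target), as is the function name `parse_map`. It is in Python rather than a Unity script, and it only turns text into grid coordinates; spawning the actual objects is left out.

```python
def parse_map(text):
    """Parse a text grid into wall coordinates plus agent and target positions.

    Assumed (hypothetical) format: '#' = wall, '.' = floor,
    'A' = agent start, 'T' = target. Coordinates are (x, y) with
    y increasing downward from the first line of the file.
    """
    rows = [line for line in text.splitlines() if line.strip()]
    walls = set()
    markers = {}
    for y, row in enumerate(rows):
        for x, ch in enumerate(row):
            if ch == "#":
                walls.add((x, y))
            elif ch in ("A", "T"):
                markers[ch] = (x, y)
    return {
        "width": max((len(row) for row in rows), default=0),
        "height": len(rows),
        "walls": walls,
        "agent": markers.get("A"),
        "target": markers.get("T"),
    }


if __name__ == "__main__":
    example = "####\n#A.#\n#.T#\n####\n"
    level = parse_map(example)
    print(level["width"], level["height"], level["agent"], level["target"])
```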

Things to do next:

- I'd like to add a visual marker on the agent to indicate what direction he's facing

- Have the camera follow the target

- A* pathfinding
